はじめに

MNISTやっていきます

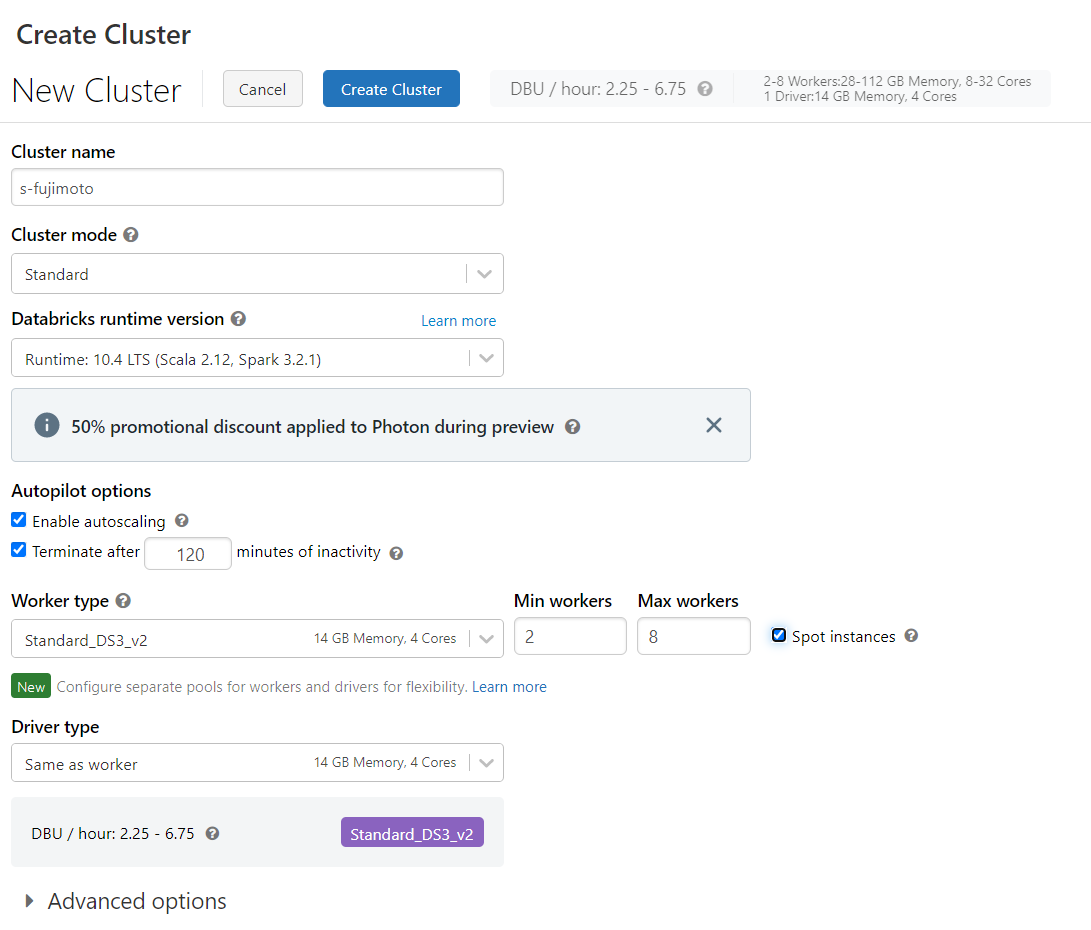

開発環境

mnist-tensorflow-keras

1.こちらのノートブックをやっていきます

https://docs.databricks.com/_static/notebooks/deep-learning/mnist-tensorflow-keras.html

2.ライブラリをインストール

|

1 2 |

%pip install tensorflow |

3.関数を定義

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 |

def get_dataset(num_classes, rank=0, size=1): from tensorflow import keras (x_train, y_train), (x_test, y_test) = keras.datasets.mnist.load_data('MNIST-data-%d' % rank) x_train = x_train[rank::size] y_train = y_train[rank::size] x_test = x_test[rank::size] y_test = y_test[rank::size] x_train = x_train.reshape(x_train.shape[0], 28, 28, 1) x_test = x_test.reshape(x_test.shape[0], 28, 28, 1) x_train = x_train.astype('float32') x_test = x_test.astype('float32') x_train /= 255 x_test /= 255 y_train = keras.utils.to_categorical(y_train, num_classes) y_test = keras.utils.to_categorical(y_test, num_classes) return (x_train, y_train), (x_test, y_test) |

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 |

def get_model(num_classes): from tensorflow.keras import models from tensorflow.keras import layers model = models.Sequential() model.add(layers.Conv2D(32, kernel_size=(3, 3), activation='relu', input_shape=(28, 28, 1))) model.add(layers.Conv2D(64, (3, 3), activation='relu')) model.add(layers.MaxPooling2D(pool_size=(2, 2))) model.add(layers.Dropout(0.25)) model.add(layers.Flatten()) model.add(layers.Dense(128, activation='relu')) model.add(layers.Dropout(0.5)) model.add(layers.Dense(num_classes, activation='softmax')) return model |

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 |

# Specify training parameters batch_size = 128 epochs = 2 num_classes = 10 def train(learning_rate=1.0): from tensorflow import keras (x_train, y_train), (x_test, y_test) = get_dataset(num_classes) model = get_model(num_classes) # Specify the optimizer (Adadelta in this example), using the learning rate input parameter of the function so that Horovod can adjust the learning rate during training optimizer = keras.optimizers.Adadelta(lr=learning_rate) model.compile(optimizer=optimizer, loss='categorical_crossentropy', metrics=['accuracy']) model.fit(x_train, y_train, batch_size=batch_size, epochs=epochs, verbose=2, validation_data=(x_test, y_test)) return model |

4.学習

|

1 2 |

model = train(learning_rate=0.1) |

|

1 2 3 4 5 6 7 8 9 10 |

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/mnist.npz 11493376/11490434 [==============================] - 0s 0us/step 11501568/11490434 [==============================] - 0s 0us/step /local_disk0/.ephemeral_nfs/envs/pythonEnv-107bf0ee-693b-4dea-8790-78f3dc70991f/lib/python3.8/site-packages/keras/optimizer_v2/adadelta.py:74: UserWarning: The `lr` argument is deprecated, use `learning_rate` instead. super(Adadelta, self).__init__(name, **kwargs) Epoch 1/2 469/469 - 64s - loss: 0.6030 - accuracy: 0.8152 - val_loss: 0.2076 - val_accuracy: 0.9394 - 64s/epoch - 137ms/step Epoch 2/2 469/469 - 64s - loss: 0.2775 - accuracy: 0.9175 - val_loss: 0.1417 - val_accuracy: 0.9584 - 64s/epoch - 136ms/step |

5.モデル評価

|

1 2 3 4 5 |

_, (x_test, y_test) = get_dataset(num_classes) loss, accuracy = model.evaluate(x_test, y_test, batch_size=128) print("loss:", loss) print("accuracy:", accuracy) |

|

1 2 3 4 |

79/79 [==============================] - 2s 29ms/step - loss: 0.1417 - accuracy: 0.9584 loss: 0.1416797637939453 accuracy: 0.9584000110626221 |

6.HorovodRunnerを用いた学習

|

1 2 3 4 5 6 7 8 9 10 11 |

import os import time # Remove any existing checkpoint files dbutils.fs.rm(("/ml/MNISTDemo/train"), recurse=True) # Create directory checkpoint_dir = '/dbfs/ml/MNISTDemo/train/{}/'.format(time.time()) os.makedirs(checkpoint_dir) print(checkpoint_dir) |

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 |

def train_hvd(checkpoint_path, learning_rate=1.0): # Import tensorflow modules to each worker from tensorflow.keras import backend as K from tensorflow.keras.models import Sequential import tensorflow as tf from tensorflow import keras import horovod.tensorflow.keras as hvd # Initialize Horovod hvd.init() # Pin GPU to be used to process local rank (one GPU per process) # These steps are skipped on a CPU cluster gpus = tf.config.experimental.list_physical_devices('GPU') for gpu in gpus: tf.config.experimental.set_memory_growth(gpu, True) if gpus: tf.config.experimental.set_visible_devices(gpus[hvd.local_rank()], 'GPU') # Call the get_dataset function you created, this time with the Horovod rank and size (x_train, y_train), (x_test, y_test) = get_dataset(num_classes, hvd.rank(), hvd.size()) model = get_model(num_classes) # Adjust learning rate based on number of GPUs optimizer = keras.optimizers.Adadelta(lr=learning_rate * hvd.size()) # Use the Horovod Distributed Optimizer optimizer = hvd.DistributedOptimizer(optimizer) model.compile(optimizer=optimizer, loss='categorical_crossentropy', metrics=['accuracy']) # Create a callback to broadcast the initial variable states from rank 0 to all other processes. # This is required to ensure consistent initialization of all workers when training is started with random weights or restored from a checkpoint. callbacks = [ hvd.callbacks.BroadcastGlobalVariablesCallback(0), ] # Save checkpoints only on worker 0 to prevent conflicts between workers if hvd.rank() == 0: callbacks.append(keras.callbacks.ModelCheckpoint(checkpoint_path, save_weights_only = True)) model.fit(x_train, y_train, batch_size=batch_size, callbacks=callbacks, epochs=epochs, verbose=2, validation_data=(x_test, y_test)) |

sparkdlをインストール

|

1 2 |

%pip install sparkdl %pip install tensorframes |

|

1 2 3 4 5 6 7 |

from sparkdl import HorovodRunner checkpoint_path = checkpoint_dir + '/checkpoint-{epoch}.ckpt' learning_rate = 0.1 hr = HorovodRunner(np=2) hr.run(train_hvd, checkpoint_path=checkpoint_path, learning_rate=learning_rate) |

エラー

|

1 2 |

ImportError: cannot import name 'resnet50' from 'keras.applications' (/local_disk0/.ephemeral_nfs/envs/pythonEnv-107bf0ee-693b-4dea-8790-78f3dc70991f/lib/python3.8/site-packages/keras/applications/__init__.py) |

お疲れ様でした。

mnist-pytorch

Azure Databricksの導入ならナレコムにおまかせください。

導入から活用方法までサポートします。お気軽にご相談ください。